mirror of https://github.com/kairos-io/kairos.git

synced 2025-02-09 05:18:51 +00:00

Remove docs (#1415)

* remove docsy as submodule

Signed-off-by: Mauro Morales <mauro.morales@spectrocloud.com>

* remove docs

Signed-off-by: Mauro Morales <mauro.morales@spectrocloud.com>

---------

Signed-off-by: Mauro Morales <mauro.morales@spectrocloud.com>


parent 38da073118

commit fdea673d54

1

.github/workflows/image-arm.yaml

vendored


@ -7,7 +7,6 @@ on:
pull_request:
paths:
- '**'
- '!docs/**'

concurrency:
group: ci-arm-${{ github.head_ref || github.ref }}-${{ github.repository }}

2

.github/workflows/image.yaml

vendored


@ -5,11 +5,9 @@ on:
- master
paths:
- '**'
- '!docs/**'
pull_request:
paths:
- '**'
- '!docs/**'
workflow_dispatch:
inputs:
immucore_dev:

3

.github/workflows/lint.yml

vendored


@ -3,12 +3,9 @@ on:
push:
branches:
- master
paths:
- '!docs/**'
pull_request:
paths:
- '**'
- '!docs/**'
env:
FORCE_COLOR: 1
jobs:

42

.github/workflows/schema.yaml

vendored


@ -1,42 +0,0 @@
name: Cloud Config Schema
on:
# To test with push events, it's easy to use a test branch e.g. schematest, you will also need to update the checkout ref and the create PR base
# push:
# branches:
# - schematest
push:
tags:
- v*
jobs:
generate-schema:
runs-on: ubuntu-latest
steps:
- name: Checkout code
uses: actions/checkout@v3
with:
ref: master
fetch-depth: 0
- name: setup-docker
uses: docker-practice/actions-setup-docker@master
- name: Install earthly
uses: Luet-lab/luet-install-action@v1
with:
repository: quay.io/kairos/packages
packages: utils/earthly
- name: Generate cloud-config Schema
run: |
earthly +generate-schema
- name: Change ownership
# Chown files generated by earthly since they are owned by root
run: sudo chown -R runner:docker docs/static/*
- name: Create Pull Request
uses: peter-evans/create-pull-request@v5
with:
token: ${{ secrets.PAT_TOKEN }}
push-to-fork: ci-robbot/c3os
base: master
branch: cloud-config-schema-updates
commit-message: ':book: Update Schema'
title: ':book: Update Schema'
body: Update latest cloud config schema release
signoff: true

13

.gitignore

vendored


@ -4,22 +4,9 @@
/cli/cli
/kairos
/dist
/docs/bin
/docs/.hugo_build.lock
/build
docs/node_modules
docs/public
!docs/public/favicon
!docs/public/CNAME
docs/dist
docs/.vscode
coverage.out
.DS_Store
/docs/bin/
/docs/public/
/docs/resources/
/docs/node_modules/
/docs/tech-doc-hugo
internal/webui/public/cypress/videos/

node_modules/

7

.gitmodules

vendored


@ -1,7 +0,0 @@

[submodule "themes/docsy"]
path = themes/docsy
url = https://github.com/google/docsy
[submodule "docs/themes/docsy"]
path = docs/themes/docsy
url = https://github.com/google/docsy.git

@ -1 +0,0 @@
kairos.io

@ -1,18 +0,0 @@
export HUGO_VERSION?=0.105.0
export HUGO_PLATFORM?=Linux-64bit

export ROOT_DIR:=$(shell dirname $(realpath $(lastword $(MAKEFILE_LIST))))

.DEFAULT_GOAL := build

.PHONY: build
build:
scripts/build.sh

.PHONY: serve
serve:
scripts/serve.sh

.PHONY: publish
publish:
scripts/publish.sh

@ -1,35 +0,0 @@
# :book: Kairos documentation

The Kairos documentation uses [docsy](https://docsy.dev).

## Prerequisites

The following software is needed to preview the documentation changes locally.

* Hugo [v0.105.0+](https://gohugo.io/installation/)
* nodeJs [v16+](https://nodejs.org/en/download/)

## Test your changes

After cloning the repo (with submodules), just run `make serve` to test the website locally.

```
$> git clone --recurse-submodule https://github.com/kairos-io/kairos
$> cd kairos/docs
$> npm run prepare
$> make serve
```

If you have a local copy already checked out, sync the submodules:

```
$> git submodule update --init --recursive --depth 1
```

To run the website locally in other platforms, e.g. MacOS:

```
$> HUGO_PLATFORM=macOS-64bit make serve
```

**Note**: If the `make serve` command does not work for you, try to start hugo directly with the command `hugo server -D`.

@ -1,21 +0,0 @@

|

||||

<svg width="347" height="125" viewBox="0 0 347 125" fill="none" xmlns="http://www.w3.org/2000/svg">

|

||||

<rect width="24.8171" height="24.8171" transform="matrix(-0.86601 0.500028 -3.18907e-05 1 64.6367 12.8008)" fill="#AEAEAE"/>

|

||||

<rect width="24.8171" height="24.8171" transform="matrix(-0.86601 -0.500028 3.18907e-05 1 43.1436 25.2109)" fill="#D6D6D6"/>

|

||||

<rect width="24.8171" height="24.8171" transform="matrix(-0.866041 0.499972 0.866041 0.499972 43.1436 0.394531)" fill="white"/>

|

||||

<rect width="24.8171" height="24.8171" transform="matrix(3.19859e-05 1 0.86601 0.500028 21.6487 37.6211)" fill="#D6D6D6"/>

|

||||

<rect width="24.8171" height="24.8171" transform="matrix(-0.866041 0.499973 0.866041 0.499972 21.6487 62.4336)" fill="white"/>

|

||||

<rect width="24.8171" height="24.8171" transform="matrix(-3.17834e-05 1 0.866009 -0.500028 0.157593 50.0312)" fill="#AEAEAE"/>

|

||||

<rect width="24.8171" height="24.8171" transform="matrix(-0.86601 0.500028 -3.19781e-05 1 64.6389 87.2539)" fill="#AEAEAE"/>

|

||||

<rect width="24.8171" height="24.8171" transform="matrix(-0.866009 -0.500028 3.18033e-05 1 43.1458 99.6641)" fill="#D6D6D6"/>

|

||||

<rect width="24.8171" height="24.8171" transform="matrix(-0.866041 0.499972 0.866041 0.499973 43.1458 74.8477)" fill="white"/>

|

||||

<rect width="24.8171" height="24.8171" transform="matrix(0.86601 -0.500028 3.18907e-05 -1 64.6334 112.082)" fill="#AEAEAE"/>

|

||||

<rect width="24.8171" height="24.8171" transform="matrix(0.86601 0.500028 -3.18907e-05 -1 86.1266 99.6719)" fill="#D6D6D6"/>

|

||||

<rect width="24.8171" height="24.8171" transform="matrix(0.866041 -0.499972 -0.866041 -0.499972 86.1266 124.488)" fill="white"/>

|

||||

<rect width="24.8171" height="24.8171" transform="matrix(-0.86601 0.500028 -3.19781e-05 1 129.114 50.0273)" fill="#AEAEAE"/>

|

||||

<rect width="24.8171" height="24.8171" transform="matrix(-0.866009 -0.500028 3.18033e-05 1 107.62 62.4375)" fill="#D6D6D6"/>

|

||||

<rect width="24.8171" height="24.8171" transform="matrix(-0.866041 0.499972 0.866041 0.499973 107.62 37.6211)" fill="white"/>

|

||||

<rect width="24.8171" height="24.8171" transform="matrix(0.86601 -0.500028 3.18907e-05 -1 64.635 37.6289)" fill="#AEAEAE"/>

|

||||

<rect width="24.8171" height="24.8171" transform="matrix(0.86601 0.500028 -3.18907e-05 -1 86.1282 25.2188)" fill="#D6D6D6"/>

|

||||

<rect width="24.8171" height="24.8171" transform="matrix(0.866041 -0.499972 -0.866041 -0.499972 86.1282 50.0352)" fill="white"/>

|

||||

<path d="M189.374 82.9414L174.851 65.159V82.9414H166.846V43.031H174.851V60.9278L189.374 43.031H199.037L182.57 62.8147L199.495 82.9414H189.374ZM202.702 66.9887C202.702 63.7867 203.331 60.9468 204.589 58.4691C205.885 55.9914 207.619 54.0855 209.792 52.7513C212.003 51.4171 214.461 50.7501 217.168 50.7501C219.531 50.7501 221.59 51.2265 223.343 52.1795C225.135 53.1325 226.564 54.3332 227.631 55.7817V51.2647H235.694V82.9414H227.631V78.31C226.602 79.7966 225.173 81.0355 223.343 82.0266C221.551 82.9795 219.474 83.456 217.111 83.456C214.442 83.456 212.003 82.7699 209.792 81.3976C207.619 80.0253 205.885 78.1003 204.589 75.6226C203.331 73.1068 202.702 70.2288 202.702 66.9887ZM227.631 67.103C227.631 65.159 227.25 63.5008 226.488 62.1285C225.725 60.7181 224.696 59.6508 223.4 58.9265C222.104 58.1642 220.713 57.783 219.226 57.783C217.74 57.783 216.367 58.1451 215.109 58.8694C213.851 59.5936 212.822 60.661 212.022 62.0714C211.259 63.4436 210.878 65.0827 210.878 66.9887C210.878 68.8946 211.259 70.5718 212.022 72.0204C212.822 73.4308 213.851 74.5171 215.109 75.2795C216.405 76.0419 217.778 76.4231 219.226 76.4231C220.713 76.4231 222.104 76.061 223.4 75.3367C224.696 74.5743 225.725 73.507 226.488 72.1347C227.25 70.7243 227.631 69.0471 227.631 67.103ZM247.571 47.4909C246.161 47.4909 244.979 47.0525 244.026 46.1758C243.112 45.261 242.654 44.1364 242.654 42.8023C242.654 41.4681 243.112 40.3627 244.026 39.4859C244.979 38.5711 246.161 38.1137 247.571 38.1137C248.982 38.1137 250.144 38.5711 251.059 39.4859C252.012 40.3627 252.489 41.4681 252.489 42.8023C252.489 44.1364 252.012 45.261 251.059 46.1758C250.144 47.0525 248.982 47.4909 247.571 47.4909ZM251.517 51.2647V82.9414H243.512V51.2647H251.517ZM267.431 56.182C268.46 54.5048 269.794 53.1897 271.433 52.2367C273.11 51.2837 275.016 50.8072 277.151 50.8072V59.2124H275.035C272.519 59.2124 270.614 59.8033 269.317 60.985C268.06 62.1666 267.431 64.2251 267.431 67.1602V82.9414H259.426V51.2647H267.431V56.182ZM296.608 83.456C293.559 83.456 290.814 
82.7889 288.375 81.4548C285.935 80.0825 284.01 78.1575 282.6 75.6798C281.228 73.202 280.541 70.3431 280.541 67.103C280.541 63.8629 281.247 61.004 282.657 58.5263C284.106 56.0486 286.069 54.1426 288.546 52.8085C291.024 51.4362 293.788 50.7501 296.837 50.7501C299.887 50.7501 302.65 51.4362 305.128 52.8085C307.606 54.1426 309.55 56.0486 310.96 58.5263C312.409 61.004 313.133 63.8629 313.133 67.103C313.133 70.3431 312.39 73.202 310.903 75.6798C309.455 78.1575 307.472 80.0825 304.957 81.4548C302.479 82.7889 299.696 83.456 296.608 83.456ZM296.608 76.4803C298.057 76.4803 299.41 76.1372 300.668 75.4511C301.964 74.7268 302.993 73.6595 303.756 72.2491C304.518 70.8387 304.899 69.1233 304.899 67.103C304.899 64.0916 304.099 61.7855 302.498 60.1845C300.935 58.5454 299.01 57.7258 296.723 57.7258C294.436 57.7258 292.511 58.5454 290.948 60.1845C289.423 61.7855 288.661 64.0916 288.661 67.103C288.661 70.1144 289.404 72.4397 290.891 74.0788C292.415 75.6798 294.321 76.4803 296.608 76.4803ZM331.127 83.456C328.535 83.456 326.209 82.9986 324.151 82.0837C322.093 81.1308 320.453 79.8538 319.234 78.2528C318.052 76.6518 317.404 74.8793 317.29 72.9352H325.352C325.504 74.155 326.095 75.1652 327.124 75.9657C328.192 76.7662 329.507 77.1664 331.07 77.1664C332.594 77.1664 333.776 76.8615 334.615 76.2516C335.491 75.6417 335.93 74.8602 335.93 73.9072C335.93 72.878 335.396 72.1157 334.329 71.6201C333.299 71.0865 331.641 70.5147 329.354 69.9048C326.991 69.333 325.047 68.7421 323.522 68.1322C322.035 67.5223 320.739 66.5884 319.634 65.3305C318.567 64.0726 318.033 62.3763 318.033 60.2416C318.033 58.4882 318.528 56.8872 319.52 55.4387C320.549 53.9902 321.997 52.8466 323.865 52.008C325.771 51.1694 328.001 50.7501 330.555 50.7501C334.329 50.7501 337.34 51.703 339.589 53.609C341.838 55.4768 343.077 58.0117 343.306 61.2137H335.644C335.529 59.9558 334.996 58.9647 334.043 58.2404C333.128 57.478 331.889 57.0968 330.326 57.0968C328.878 57.0968 327.753 57.3637 326.953 57.8973C326.19 58.431 325.809 59.1743 325.809 
60.1273C325.809 61.1946 326.343 62.0142 327.41 62.586C328.477 63.1196 330.136 63.6723 332.385 64.2441C334.672 64.8159 336.559 65.4067 338.045 66.0166C339.532 66.6266 340.809 67.5795 341.876 68.8756C342.982 70.1335 343.553 71.8107 343.592 73.9072C343.592 75.7369 343.077 77.3761 342.048 78.8246C341.057 80.2731 339.608 81.4167 337.702 82.2553C335.834 83.0558 333.643 83.456 331.127 83.456Z" fill="white"/>

|

||||

</svg>

|

||||

|


@ -1,376 +0,0 @@

|

||||

.wrapper:where(.astro-7XAARZHW) {

|

||||

display: flex;

|

||||

align-items: center;

|

||||

justify-content: center;

|

||||

}

|

||||

body {

|

||||

margin: 0;

|

||||

}

|

||||

:where(.astro-7XAARZHW) {

|

||||

font-family: Noto Sans;

|

||||

box-sizing: border-box;

|

||||

}

|

||||

.hero:where(.astro-7XAARZHW) {

|

||||

position: relative;

|

||||

overflow: hidden;

|

||||

}

|

||||

@media screen and (min-width: 1024px) {

|

||||

.hero:where(.astro-7XAARZHW) {

|

||||

height: 45vw;

|

||||

}

|

||||

}

|

||||

.hero:where(.astro-7XAARZHW) {

|

||||

display: block;

|

||||

width: 100%;

|

||||

height: auto;

|

||||

}

|

||||

.hero-logo:where(.astro-7XAARZHW) {

|

||||

margin-bottom: 58px;

|

||||

}

|

||||

@media screen and (min-width: 1024px) {

|

||||

.hero-logo:where(.astro-7XAARZHW) {

|

||||

margin-right: 260px;

|

||||

margin-bottom: 0;

|

||||

}

|

||||

}

|

||||

.overlay:where(.astro-7XAARZHW) {

|

||||

width: 100%;

|

||||

height: 100%;

|

||||

min-height: 708px;

|

||||

display: flex;

|

||||

flex-direction: row;

|

||||

align-items: center;

|

||||

justify-content: center;

|

||||

padding: 0 24px;

|

||||

background-color: #03153a;

|

||||

}

|

||||

.constraint:where(.astro-7XAARZHW) {

|

||||

width: 100%;

|

||||

max-width: 1300px;

|

||||

display: flex;

|

||||

flex-direction: row;

|

||||

align-items: center;

|

||||

justify-content: center;

|

||||

}

|

||||

.constraint:where(.astro-7XAARZHW).vertical {

|

||||

flex-direction: column;

|

||||

}

|

||||

.constraint:where(.astro-7XAARZHW).vertical>.header:where(.astro-7XAARZHW) {

|

||||

display: flex;

|

||||

flex-direction: column;

|

||||

align-items: center;

|

||||

width: 100%;

|

||||

max-width: 1200px;

|

||||

margin-top: 60px;

|

||||

}

|

||||

@media screen and (min-width: 1024px) {

|

||||

.constraint:where(.astro-7XAARZHW).vertical>.header:where(.astro-7XAARZHW) {

|

||||

flex-direction: row;

|

||||

margin-top: 0;

|

||||

}

|

||||

}

|

||||

.title:where(.astro-7XAARZHW) {

|

||||

font-weight: 700;

|

||||

font-size: 58px;

|

||||

line-height: 80px;

|

||||

letter-spacing: .02em;

|

||||

color: #fff;

|

||||

margin-top: 0;

|

||||

}

|

||||

.subtitle:where(.astro-7XAARZHW) {

|

||||

font-weight: 500;

|

||||

font-size: 31px;

|

||||

line-height: 130%;

|

||||

letter-spacing: .01em;

|

||||

color: #fff;

|

||||

}

|

||||

.title:where(.astro-7XAARZHW)>span:where(.astro-7XAARZHW) {

|

||||

color: #e06948;

|

||||

}

|

||||

.grid:where(.astro-7XAARZHW) {

|

||||

display: grid;

|

||||

grid-gap: 2rem;

|

||||

}

|

||||

.sectionTitle:where(.astro-7XAARZHW) {

|

||||

font-weight: 700;

|

||||

font-size: var(--f-u8);

|

||||

margin-top: 4rem;

|

||||

margin-bottom: 2rem;

|

||||

}

|

||||

.roles:where(.astro-7XAARZHW) {

|

||||

display: flex;

|

||||

flex-wrap: wrap;

|

||||

gap: .5em;

|

||||

font-size: var(--f-d1);

|

||||

}

|

||||

.role:where(.astro-7XAARZHW) {

|

||||

position: relative;

|

||||

display: inline-block;

|

||||

white-space: nowrap;

|

||||

font-weight: 900;

|

||||

color: var(--t-bg);

|

||||

background-color: var(--t-fg);

|

||||

padding: .25em .5em;

|

||||

z-index: 2;

|

||||

}

|

||||

.invert:where(.astro-7XAARZHW) {

|

||||

position: absolute;

|

||||

color: var(--t-fg);

|

||||

display: flex;

|

||||

align-items: center;

|

||||

justify-content: center;

|

||||

width: 100%;

|

||||

height: 100%;

|

||||

top: 0;

|

||||

left: 0;

|

||||

pointer-events: none;

|

||||

clip-path: polygon(0% 100%, 100% 100%, 100% 200%, 0% 200%);

|

||||

transition: clip-path cubic-bezier(.4, 0, .5, 1) .15s;

|

||||

}

|

||||

.desc:where(.astro-7XAARZHW) {

|

||||

font-weight: 400;

|

||||

font-size: 24px;

|

||||

line-height: 170%;

|

||||

color: #fff;

|

||||

max-width: 748px;

|

||||

margin: 64px 0;

|

||||

max-width: 600px;

|

||||

}

|

||||

@media screen and (min-width: 1024px) {

|

||||

.desc:where(.astro-7XAARZHW) {

|

||||

max-width: 1199px;

|

||||

font-size: 18px;

|

||||

}

|

||||

}

|

||||

.desc:where(.astro-7XAARZHW) span:where(.astro-7XAARZHW) {

|

||||

color: #ee5007;

|

||||

font-weight: 700;

|

||||

}

|

||||

.bio:where(.astro-7XAARZHW) {

|

||||

line-height: 2;

|

||||

margin-bottom: 2rem;

|

||||

}

|

||||

.bio:where(.astro-7XAARZHW)>span:where(.astro-7XAARZHW):first-of-type {

|

||||

line-height: 1;

|

||||

margin-bottom: .5em;

|

||||

display: block;

|

||||

font-weight: 700;

|

||||

font-size: var(--f-u4);

|

||||

}

|

||||

.orange-line:where(.astro-7XAARZHW) {

|

||||

height: 16px;

|

||||

width: 100%;

|

||||

background: #ee5007;

|

||||

}

|

||||

.buttons:where(.astro-7XAARZHW) {

|

||||

display: flex;

|

||||

flex-direction: column;

|

||||

align-items: center;

|

||||

}

|

||||

@media screen and (min-width: 420px) {

|

||||

.buttons:where(.astro-7XAARZHW) {

|

||||

flex-direction: row;

|

||||

align-items: flex-start;

|

||||

}

|

||||

}

|

||||

.buttons:where(.astro-7XAARZHW)>a:where(.astro-7XAARZHW) {

|

||||

display: flex;

|

||||

flex-direction: row;

|

||||

justify-content: center;

|

||||

align-items: center;

|

||||

padding: 8px 16px;

|

||||

width: 210px;

|

||||

height: 50px;

|

||||

font-weight: 600;

|

||||

font-size: 22px;

|

||||

line-height: 100%;

|

||||

color: #fff;

|

||||

text-decoration: none;

|

||||

border-radius: 4px;

|

||||

margin-top: 53px;

|

||||

}

|

||||

@media screen and (min-width: 420px) {

|

||||

.buttons:where(.astro-7XAARZHW)>a:where(.astro-7XAARZHW) {

|

||||

margin-right: 40px;

|

||||

}

|

||||

}

|

||||

#quickstart:where(.astro-7XAARZHW) {

|

||||

background: #ee5007;

|

||||

box-shadow: 0 0 2px #0003, 0 1px 2px #0000001a, inset 0 -1px #0003;

|

||||

border-radius: 4px;

|

||||

}

|

||||

#download:where(.astro-7XAARZHW) {

|

||||

border: 1px solid #8facd4;

|

||||

box-shadow: inset 0 -1px #0003;

|

||||

filter: drop-shadow(0px 0px 2px rgba(0, 0, 0, .2)) drop-shadow(0px 1px 2px rgba(0, 0, 0, .1));

|

||||

color: #8facd4;

|

||||

}

|

||||

footer:where(.astro-7XAARZHW) {

|

||||

background: #e06948;

|

||||

height: 250px;

|

||||

display: flex;

|

||||

flex-direction: row;

|

||||

justify-content: center;

|

||||

align-items: center;

|

||||

}

|

||||

.sections-title:where(.astro-7XAARZHW) {

|

||||

font-weight: 600;

|

||||

font-size: 32px;

|

||||

line-height: 50px;

|

||||

color: #0a2239;

|

||||

margin-top: 123px;

|

||||

text-align: center;

|

||||

}

|

||||

.section-wrapper {

|

||||

display: flex;

|

||||

flex-direction: column;

|

||||

align-items: center;

|

||||

justify-content: center;

|

||||

}

|

||||

.sections {

|

||||

display: flex;

|

||||

flex-direction: column;

|

||||

align-items: center;

|

||||

max-width: 1200px;

|

||||

justify-content: center;

|

||||

}

|

||||

.sections[aria-current=horizontal] {

|

||||

flex-direction: column;

|

||||

width: 100%}

|

||||

@media screen and (min-width: 1024px) {

|

||||

.sections[aria-current=horizontal] {

|

||||

flex-direction: row;

|

||||

}

|

||||

}

|

||||

.main-section {

|

||||

display: flex;

|

||||

flex-direction: column;

|

||||

align-items: center;

|

||||

justify-content: center;

|

||||

padding: 64px 48px;

|

||||

box-sizing: border-box;

|

||||

width: 100%}

|

||||

.main-section>.sections {

|

||||

padding-bottom: 0;

|

||||

}

|

||||

.main-section>.title {

|

||||

font-weight: 600;

|

||||

font-size: 42px;

|

||||

line-height: 52px;

|

||||

text-align: center;

|

||||

color: #161b33;

|

||||

margin: 43px 0;

|

||||

}

|

||||

.secondary-section {

|

||||

display: flex;

|

||||

flex-direction: column;

|

||||

border-bottom: 1px solid rgba(0, 0, 0, .1);

|

||||

padding: 32px 0;

|

||||

}

|

||||

@media screen and (min-width: 1024px) {

|

||||

.secondary-section {

|

||||

flex-direction: row;

|

||||

}

|

||||

}

|

||||

.secondary-section img {

|

||||

margin-top: 80px;

|

||||

margin-bottom: 40px;

|

||||

}

|

||||

.secondary-section:first-child {

|

||||

padding-top: 0;

|

||||

}

|

||||

.secondary-section:last-child {

|

||||

border-bottom: 0;

|

||||

padding-bottom: 0;

|

||||

}

|

||||

.secondary-section>.title {

|

||||

min-width: 0px;

|

||||

width: 100%;

|

||||

display: flex;

|

||||

flex-direction: column;

|

||||

align-items: center;

|

||||

justify-content: flex-start;

|

||||

font-family: Noto Sans Mono;

|

||||

font-weight: 700;

|

||||

font-size: 24px;

|

||||

line-height: 38px;

|

||||

color: #161b33;

|

||||

text-align: center;

|

||||

margin-bottom: 32px;

|

||||

}

|

||||

@media screen and (min-width: 1024px) {

|

||||

.secondary-section>.title {

|

||||

min-width: 480px;

|

||||

}

|

||||

}

|

||||

.sections[aria-current=horizontal] p {

|

||||

text-align: center;

|

||||

}

|

||||

.sections[aria-current=horizontal]>.secondary-section {

|

||||

flex-direction: column;

|

||||

align-items: center;

|

||||

border-bottom: 0;

|

||||

padding: 0;

|

||||

width: 100%;

|

||||

margin: 0;

|

||||

}

|

||||

@media screen and (min-width: 1024px) {

|

||||

.sections[aria-current=horizontal]>.secondary-section {

|

||||

margin: 0 24px;

|

||||

}

|

||||

}

|

||||

.sections[aria-current=horizontal]>.secondary-section .image-container {

|

||||

height: 100px;

|

||||

display: flex;

|

||||

justify-content: flex-end;

|

||||

}

|

||||

.sections[aria-current=horizontal]>.secondary-section .image-container-removeHeight {

|

||||

display: flex;

|

||||

justify-content: flex-end;

|

||||

}

|

||||

.sections[aria-current=horizontal]>.secondary-section img {

|

||||

margin: 0;

|

||||

}

|

||||

.sections[aria-current=horizontal]>.secondary-section:first-child {

|

||||

margin-left: 0;

|

||||

}

|

||||

.sections[aria-current=horizontal]>.secondary-section:last-child {

|

||||

margin-right: 0;

|

||||

}

|

||||

.sections[aria-current=horizontal]>.secondary-section>.title {

|

||||

padding: 32px 12px;

|

||||

flex-grow: 1;

|

||||

width: 100%;

|

||||

min-width: auto;

|

||||

max-width: 340px;

|

||||

}

|

||||

.sections[aria-current=horizontal]>.secondary-section>.title>div {

|

||||

margin: 32px 0;

|

||||

}

|

||||

.tertiary-section {

|

||||

margin-bottom: 24px;

|

||||

border-bottom: 1px solid rgba(0, 0, 0, .1);

|

||||

width: 100%}

|

||||

.tertiary-section:last-child {

|

||||

border-bottom: 0px;

|

||||

margin-bottom: 0;

|

||||

}

|

||||

.tertiary-section>.title {

|

||||

font-family: Noto Sans Mono;

|

||||

font-style: normal;

|

||||

font-weight: 700;

|

||||

font-size: 24px;

|

||||

line-height: 33px;

|

||||

color: #161b33;

|

||||

margin-bottom: 16px;

|

||||

}

|

||||

.sections p {

|

||||

font-family: Noto Sans;

|

||||

font-weight: 400;

|

||||

font-size: 16px;

|

||||

line-height: 160%;

|

||||

letter-spacing: .01em;

|

||||

color: #344079;

|

||||

margin-top: 0;

|

||||

margin-bottom: 24px;

|

||||

}

|

||||

@ -1,29 +0,0 @@

|

||||

/*

|

||||

|

||||

Add styles or override variables from the theme here.

|

||||

|

||||

*/

|

||||

|

||||

$primary: #ee5007;

|

||||

$secondary: #e1e5ee;

|

||||

$enable-gradients: true;

|

||||

$enable-rounded: false;

|

||||

$enable-shadows: true;

|

||||

$google_font_name: "Noto Sans";

|

||||

$google_font_family: "Noto+Sans:0,300;0,400;0,500;0,600;0,700;1,300;1,400;1,500;1,600;1,700";

|

||||

$td-enable-google-fonts: false;

|

||||

|

||||

|

||||

a {

|

||||

color: #009ffd

|

||||

}

|

||||

|

||||

a:hover {

|

||||

color: #03153a

|

||||

}

|

||||

|

||||

// Disables text "Kairos" in the menu. Logo contains it already

|

||||

.td-navbar .navbar-brand__name {

|

||||

display: none;

|

||||

}

|

||||

|

||||

172

docs/config.toml


@ -1,172 +0,0 @@

|

||||

baseURL = "https://kairos.io"

|

||||

title = "Kairos"

|

||||

|

||||

enableRobotsTXT = true

|

||||

|

||||

# Hugo allows theme composition (and inheritance). The precedence is from left to right.

|

||||

theme = ["docsy"]

|

||||

|

||||

# Will give values to .Lastmod etc.

|

||||

enableGitInfo = true

|

||||

# Language settings

|

||||

contentDir = "content/en"

|

||||

defaultContentLanguage = "en"

|

||||

defaultContentLanguageInSubdir = false

|

||||

# Useful when translating.

|

||||

enableMissingTranslationPlaceholders = true

|

||||

enableEmoji = true

|

||||

|

||||

disableKinds = ["taxonomy", "taxonomyTerm"]

|

||||

|

||||

# Highlighting config

|

||||

pygmentsCodeFences = true

|

||||

pygmentsUseClasses = false

|

||||

# Use the new Chroma Go highlighter in Hugo.

|

||||

pygmentsUseClassic = false

|

||||

#pygmentsOptions = "linenos=table"

|

||||

# See https://help.farbox.com/pygments.html

|

||||

pygmentsStyle = "tango"

|

||||

|

||||

# Configure how URLs look like per section.

|

||||

[permalinks]

|

||||

blog = "/:section/:year/:month/:day/:slug/"

|

||||

|

||||

## Configuration for BlackFriday markdown parser: https://github.com/russross/blackfriday

|

||||

[blackfriday]

|

||||

plainIDAnchors = true

|

||||

hrefTargetBlank = true

|

||||

angledQuotes = false

|

||||

latexDashes = true

|

||||

|

||||

# Image processing configuration.

|

||||

[imaging]

|

||||

resampleFilter = "CatmullRom"

|

||||

quality = 75

|

||||

anchor = "smart"

|

||||

|

||||

[services]

|

||||

[services.googleAnalytics]

|

||||

# Comment out the next line to disable GA tracking. Also disables the feature described in [params.ui.feedback].

|

||||

id = "UA-00000000-0"

|

||||

|

||||

# Language configuration

|

||||

|

||||

[languages]

|

||||

[languages.en]

|

||||

title = "Kairos - The immutable Linux meta-distribution for edge Kubernetes"

|

||||

description = "The immutable edge Kubernetes"

|

||||

languageName ="English"

|

||||

# Weight used for sorting.

|

||||

weight = 1

|

||||

|

||||

[[menu.main]]

|

||||

name = "Contribute"

|

||||

weight = 50

|

||||

url = "https://github.com/kairos-io/kairos/contribute"

|

||||

pre = "<i class='fab fa-github'></i>"

|

||||

post = ""

|

||||

[[menu.main]]

|

||||

name = "Contribution guidelines"

|

||||

weight = 50

|

||||

url = "https://github.com/kairos-io/kairos/blob/master/CONTRIBUTING.md"

|

||||

pre = "<i class='fab fa-github'></i>"

|

||||

post = ""

|

||||

[markup]

|

||||

[markup.goldmark]

|

||||

[markup.goldmark.renderer]

|

||||

unsafe = true

|

||||

[markup.highlight]

|

||||

# See a complete list of available styles at https://xyproto.github.io/splash/docs/all.html

|

||||

style = "tango"

|

||||

# Everything below this are Site Params

|

||||

|

||||

[params]

|

||||

copyright = "Kairos authors"

|

||||

privacy_policy = "https://policies.google.com/privacy"

|

||||

|

||||

prism_syntax_highlighting = true

|

||||

|

||||

# First one is picked as the Twitter card image if not set on page.

|

||||

# images = ["images/project-illustration.png"]

|

||||

|

||||

# Menu title if your navbar has a versions selector to access old versions of your site.

|

||||

# This menu appears only if you have at least one [params.versions] set.

|

||||

version_menu = "Releases"

|

||||

|

||||

# Repository configuration (URLs for in-page links to opening issues and suggesting changes)

|

||||

github_repo = "https://github.com/kairos-io/kairos"

|

||||

# An optional link to a related project repo. For example, the sibling repository where your product code lives.

|

||||

github_project_repo = "https://github.com/kairos-io/kairos"

|

||||

|

||||

# Specify a value here if your content directory is not in your repo's root directory

|

||||

github_subdir = "docs"

|

||||

github_branch = "master"

|

||||

# Google Custom Search Engine ID. Remove or comment out to disable search.

|

||||

#gcs_engine_id = "011737558837375720776:fsdu1nryfng"

|

||||

|

||||

# Enable Algolia DocSearch

|

||||

algolia_docsearch = false

|

||||

|

||||

# Enable Lunr.js offline search

|

||||

offlineSearch = true

|

||||

|

||||

[params.softwareVersions]

|

||||

k3s = "k3sv1.26.3+k3s1"

|

||||

kairos = "v2.0.0"

|

||||

flavor = "debian"

|

||||

armFlavor = "alpine"

|

||||

registryURL = "quay.io/kairos"

|

||||

# User interface configuration

|

||||

[params.ui]

|

||||

# Enable to show the side bar menu in its compact state.

|

||||

sidebar_menu_compact = false

|

||||

# Set to true to disable breadcrumb navigation.

|

||||

breadcrumb_disable = true

|

||||

# Set to true to hide the sidebar search box (the top nav search box will still be displayed if search is enabled)

|

||||

sidebar_search_disable = true

|

||||

# Set to false if you don't want to display a logo (/assets/icons/logo.svg) in the top nav bar

|

||||

navbar_logo = true

|

||||

# Set to true to disable the About link in the site footer

|

||||

footer_about_disable = false

|

||||

|

||||

# Adds a H2 section titled "Feedback" to the bottom of each doc. The responses are sent to Google Analytics as events.

|

||||

# This feature depends on [services.googleAnalytics] and will be disabled if "services.googleAnalytics.id" is not set.

|

||||

# If you want this feature, but occasionally need to remove the "Feedback" section from a single page,

|

||||

# add "hide_feedback: true" to the page's front matter.

|

||||

[params.ui.feedback]

|

||||

enable = true

|

||||

# The responses that the user sees after clicking "yes" (the page was helpful) or "no" (the page was not helpful).

|

||||

yes = 'Awesome! Glad to hear it! Please <a href="https://github.com/kairos-io/kairos/issues/new">tell us how we can improve</a>.'

|

||||

no = 'Oh snap! Sorry to hear that. Please <a href="https://github.com/kairos-io/kairos/issues/new">tell us how we can improve</a>.'

|

||||

|

||||

[params.links]

|

||||

[[params.links.developer]]

|

||||

name = "GitHub"

|

||||

url = "https://github.com/kairos-io/kairos"

|

||||

icon = "fab fa-github"

|

||||

desc = "Development takes place here!"

|

||||

[[params.links.user]]

|

||||

name = "Matrix"

|

||||

url = "https://matrix.to/#/#kairos-io:matrix.org"

|

||||

icon = "fa fa-message"

|

||||

desc = "Join us on Matrix!"

|

||||

[[params.links.user]]

|

||||

name = "GitHub discussions"

|

||||

url = "https://github.com/kairos-io/kairos/discussions"

|

||||

icon = "fa fa-comments"

|

||||

desc = "Questions?"

|

||||

[[params.links.user]]

|

||||

name = "Office hours calendar"

|

||||

url = "https://calendar.google.com/calendar/embed?src=c_6d65f26502a5a67c9570bb4c16b622e38d609430bce6ce7fc1d8064f2df09c11%40group.calendar.google.com&ctz=Europe%2FRome"

|

||||

icon = "fa fa-calendar"

|

||||

desc = "Join us in our Office hours!"

|

||||

[[params.links.user]]

|

||||

name = "Slack"

|

||||

url = "https://join.slack.com/t/spectrocloudcommunity/shared_invite/zt-1k7wsz840-ugSsPKzZCP5gkasJ0kNpqw"

|

||||

icon = "fab fa-slack"

|

||||

desc = "Join us on Slack!"

|

||||

[[params.links.user]]

|

||||

name = "Newsletter"

|

||||

url = "https://kairoslinux.substack.com/"

|

||||

icon = "fa fa-envelope"

|

||||

desc = "Subscribe to the newsletter!"

|

||||

@ -1,31 +0,0 @@

|

||||

---

|

||||

title: "Kairos at FOSDEM 2023"

|

||||

date: 2023-02-07T10:53:13+01:00

|

||||

author: Mauro Morales ([Twitter](https://twitter.com/mauromrls)) ([GitHub](https://github.com/mauromorales))

|

||||

---

|

||||

|

||||

I recently had the opportunity to attend FOSDEM 2023 and share a bit about the Kairos project. In this post I want to summarize what I presented and share other interesting presentations I attended, which I believe are relevant for Kairos and our community.

|

||||

|

||||

## How we build and maintain Kairos

|

||||

|

||||

I had the opportunity to present [How we build and maintain Kairos](https://fosdem.org/2023/schedule/event/kairos/). In the first half of the presentation, I introduce the different elements that make Kairos a great OS for Edge Kubernetes. In the second half, you will get an overview of how the Kairos Factory works, starting from the different Linux distributions all the way up to producing Kairos core and standard images. Because my presentation took place in the Distributions Devroom, I put some extra emphasis on the challenges we face in being distribution agnostic.

|

||||

|

||||

The talk is intended for newcomers, so I made an effort to describe things in simple and welcoming language. However, I think it can also be interesting for those who might already know about Kairos but wonder how to extend the core and standard images, or simply want a better understanding of how all the pieces interconnect.

|

||||

|

||||

As I mentioned, the presentation took place in the Distributions Devroom and we're very thankful to them for hosting us. While it was a great experience and the talk seemed to be well received, I now realize that the topic is probably more relevant for a different devroom, for example, the [Image-based Linux and Secure Measured Boot devroom](https://fosdem.org/2023/schedule/track/image_based_linux_and_secure_measured_boot/), to which I'll make sure to send proposals next year.

|

||||

|

||||

## Other talks which are relevant to Kairos

|

||||

|

||||

There were other interesting presentations I had the opportunity to attend, which I think are also relevant to Kairos and our community. These would be my top picks:

|

||||

|

||||

If you're completely new to the concepts of Image-Based Linux, Unified Kernel Image or Discoverable Disk Image, I'd recommend checking Luca Boccassi's talk [Introducing and decoding image-based Linux terminology and concepts](https://fosdem.org/2023/schedule/event/image_linux_secureboot_uki_ddi_ohmy/). As someone who very recently joined the Kairos project, I still get a bit lost with all the different technologies used in Image-Based Linux. The presenter did a good job clarifying some of these technologies and how they work together.

|

||||

|

||||

One of the key presentations, in my opinion, was Lennart Poettering's [Measured Boot, Protecting Secrets and you](https://fosdem.org/2023/schedule/event/image_linux_secureboot_tpm/), where he talks about Trusted Platform Modules and upcoming functionality in systemd. I'm pretty sure some of these features will be relevant for Kairos sooner rather than later.

|

||||

|

||||

Last but not least, there was an interesting talk by Gabriel Kerneis about [User-friendly Lightweight TPM Remote Attestation over Bluetooth](https://fosdem.org/2023/schedule/event/image_linux_secureboot_ultrablue/). My guess is that we will continue to see different methods to perform and simplify attestation. Because one of our goals at the Kairos project is to be as friendly as we can to our user base, I can only imagine we will end up introducing some sort of remote attestation technology like Ultrablue in the future.

|

||||

|

||||

## Conclusion

|

||||

|

||||

FOSDEM is a very important conference when it comes to free and open source software and I'm very happy that Kairos was present. First of all because I think the work we're doing with Kairos is helping solve some of the most challenging issues of running cloud native applications on the edge, but also because as an open source project, it was nice to introduce ourselves to the community there and start a conversation. Expect us to keep engaging with you in further editions of FOSDEM and other conferences!

|

||||

@ -1,22 +0,0 @@

|

||||

---

|

||||

title: "Kairos at the KCD Amsterdam and Paris 2023"

|

||||

date: 2023-03-09T10:53:13+01:00

|

||||

author: Mauro Morales ([Twitter](https://twitter.com/mauromrls)) ([GitHub](https://github.com/mauromorales))

|

||||

---

|

||||

|

||||

We recently had the opportunity to sponsor two Kubernetes events, [KCD Amsterdam][amsterdam] and [KCD Paris][paris]. This blog post is a summary of my personal experience attending them.

|

||||

|

||||

Let me start by saying that I'm fairly new to Kubernetes and its community :wave:. I know this project is big and that there are many companies building products and services around it, or interested in adopting it. So, I was very curious to see what kind of people I was going to meet and to understand how Kairos could help them.

|

||||

|

||||

Most attendees who approached us at the Kairos booths were hearing about Kairos for the first time, and genuinely wanted to know what the project was about. I feel confident saying this because we didn't bring fancy prizes to give away, and yet most of them would happily stay with us for 5, 10 and up to 15 minutes hearing about our features and engaging in conversation.

|

||||

|

||||

_If you're reading this and would like to know about those cool features, I'd recommend checking out [Getting Started](/docs/getting-started/), [Web UI](/docs/installation/webui/), [P2P Network](/docs/architecture/network/) and [AuroraBoot](/docs/reference/auroraboot/)._

|

||||

|

||||

When you're in the trenches building a product, talking to users or potential users is super valuable because it lets you see first hand what kind of issues they are trying to solve. I don't like building projects just because they are cool. To me, it's important that they make people's lives easier. Some of the folks who reached out to us had clear problems in mind, and they didn't shy away from asking hard questions about the internals of Kairos, our project's governance and beyond. I'm very pleased to say that some of them left the booth with a smile on their face, because they might have found a good fit.

|

||||

|

||||

While I didn't get to attend any of the talks, I saw some really interesting topics, some of them from fantastic organizations like CERN! What I did do, however, was speak to some of the folks at the other booths, to see what they were up to :mag: and, most importantly, whether our different projects could help each other out :raised_hands:.

|

||||

|

||||

Last but not least, let me thank everyone :bow: who visited our booth for your valuable time and feedback. I think every one of my colleagues will agree that we're committed to building a great product that solves real-world problems, and we plan to use that feedback accordingly. We have a passion for open source and we understand that this means much more than just great engineering and best practices. It also means being there for you, the community.

|

||||

|

||||

[amsterdam]: https://community.cncf.io/events/details/cncf-kcd-netherlands-presents-kubernetes-community-days-amsterdam-2023/

|

||||

[paris]: https://community.cncf.io/events/details/cncf-kcd-france-presents-kubernetes-community-days-france-2023/

|

||||

@ -1,4 +0,0 @@

|

||||

---

|

||||

title: Blog

|

||||

menu: {main: {weight: 50}}

|

||||

---

|

||||

@ -1,296 +0,0 @@

|

||||

---

|

||||

title: "Access your home-lab Kairos cluster over a Wireguard VPN"

|

||||

date: 2023-03-29T10:53:13+01:00

|

||||

author: Dimitris Karakasilis ([Personal page](https://dimitris.karakasilis.me)) ([GitHub](https://github.com/jimmykarily)) ([Codeberg](https://codeberg.org/dkarakasilis/))

|

||||

---

|

||||

|

||||

## The problem

|

||||

|

||||

You got yourself a Raspberry Pi (or more), and you want to put them to good use.

|

||||

You decide to make a Kubernetes cluster out of them, so that you can utilise the resources better, use familiar tools and implement infrastructure-as-code.

|

||||

|

||||

Up to this point, kudos to you for demanding no less than a real cloud from your home infra.

|

||||

|

||||

Like the smart person you are, you probably used [Kairos](https://kairos.io/) to create your cluster and it's now up and running.

|

||||

It's now time to run some workloads.

|

||||

|

||||

Here is my list if you need some ideas:

|

||||

|

||||

- A self-hosted Dropbox alternative (e.g. [Seafile](https://www.seafile.com/en/home/), [NextCloud](https://nextcloud.com/) or other)

|

||||

- [Pihole](https://pi-hole.net/)

|

||||

- An [mqtt](https://mqtt.org/) broker for your IoT projects

|

||||

- Your own [Gitea](https://gitea.io/en-us/) instance

|

||||

- Your own ChatGPT alternative (e.g. using [llama-cli](https://github.com/go-skynet/llama-cli) or [serge](https://github.com/nsarrazin/serge))

|

||||

|

||||

None of these workloads is intended for public access. There are ways to expose the cluster to the world (e.g. like I described [in another post](https://dimitris.karakasilis.me/2022/12/26/self-hosted-ci.html))

|

||||

but it would be better if only devices within a VPN had access to it.

|

||||

|

||||

Once again, there are many VPN solutions out there, but for this blog post, we'll go with [Wireguard](https://www.wireguard.com/).

|

||||

|

||||

So here is the problem in one sentence:

|

||||

|

||||

> "How do we expose our (possibly behind NAT) cluster, to machines inside the same Wireguard VPN?"

|

||||

|

||||

_"NAT" is the main part of the problem because otherwise this would simply be a blog post on how to create a Wireguard VPN. There are many nice tutorials already out there for that._

|

||||

|

||||

## A Solution

|

||||

|

||||

While trying to solve the problem, I learned 2 things about Wireguard that I didn't know:

|

||||

|

||||

1. Wireguard doesn't distinguish between a "server" and a "client". All peers are made equal.

|

||||

2. Wireguard doesn't provide a solution for NAT traversal. How you access nodes behind NAT is up to you.

|

||||

|

||||

So imagine you have your cluster behind your home router (NAT) and your mobile phone on another network (behind NAT too) trying to access a service on the cluster.

|

||||

That's not possible, unless there is some public IP address that somehow forwards requests to the cluster.

|

||||

|

||||

And that's the idea this solution is based on.

|

||||

|

||||

### High level view

|

||||

|

||||

|

||||

|

||||

The idea is similar to the one I described [in another post](https://dimitris.karakasilis.me/2022/12/26/self-hosted-ci.html).

|

||||

The only difference is that this time we expose the cluster only to machines inside the VPN.

|

||||

|

||||

Prerequisites:

|

||||

|

||||

- A VM with a public IP address and SSH access (the smallest instance you can get is good enough)

|

||||

- `kubectl` access to the cluster we want to expose (it doesn't have to be Kairos, even [`k3d`](https://k3d.io) and [`kind`](https://kind.sigs.k8s.io/) will do)

|

||||

- A machine to test the result (a smartphone where Wireguard can be installed is fine)

|

||||

|

||||

### Step by step

|

||||

|

||||

From this point on, we will use the IP address `1.2.3.4` as the public IP address of the VM in the cloud.

|

||||

Replace it with the one matching your VM. We also assume that the user with SSH access is `root`. Replace if necessary.

|

||||

|

||||

#### Set up the cloud VM

|

||||

|

||||

SSH to the machine:

|

||||

|

||||

```bash

|

||||

$ ssh root@1.2.3.4

|

||||

```

|

||||

|

||||

Create Wireguard keys:

|

||||

|

||||

```bash

|

||||

$ wg genkey | tee privatekey | wg pubkey > publickey

|

||||

```

|

||||

|

||||

Create Wireguard config:

|

||||

|

||||

```bash

|

||||

$ cat << EOF > /etc/wireguard/wg0.conf

|

||||

[Interface]

|

||||

Address = 192.168.6.1/24

|

||||

PrivateKey = $(cat privatekey)

|

||||

ListenPort = 41194

|

||||

|

||||

# Mobile client

|

||||

[Peer]

|

||||

PublicKey = <public key from next step>

|

||||

AllowedIPs = 192.168.6.2/32

|

||||

EOF

|

||||

```

|

||||

|

||||

Start and enable the Wireguard service:

|

||||

|

||||

```bash

|

||||

$ sudo systemctl enable --now wg-quick@wg0

|

||||

```

|

||||

|

||||

Allow binding non-loopback interfaces when creating an SSH reverse tunnel

|

||||

by setting `GatewayPorts clientspecified` in `/etc/ssh/sshd_config`.
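
With `GatewayPorts clientspecified`, the SSH client that opens the reverse tunnel chooses which address the forwarded port binds to on the VM. Below is a minimal sketch of what such a client invocation could look like, using the standard OpenSSH `-R [bind_address:]port:host:hostport` syntax. The helper name `reverse_tunnel_command` and the local target `localhost:8080` are assumptions made for illustration; the helper image used in this post may build its tunnel differently.

```python
import shlex


def reverse_tunnel_command(bind_ip, bind_port, target_host, target_port,
                           jumpbox, user="root", key_file=None):
    """Build an `ssh` argv that opens a reverse tunnel on the jumpbox.

    Hypothetical helper: connections arriving at bind_ip:bind_port on the
    jumpbox are forwarded to target_host:target_port as seen from the client.
    """
    spec = f"{bind_ip}:{bind_port}:{target_host}:{target_port}"
    argv = ["ssh", "-N", "-R", spec]  # -N: forward ports only, run no command
    if key_file:
        argv += ["-i", key_file]
    argv.append(f"{user}@{jumpbox}")
    return argv


# Bind only the VPN address of the VM, not its public address.
command = reverse_tunnel_command("192.168.6.1", 80, "localhost", 8080, "1.2.3.4")
print(shlex.join(command))
```

Because the bind address is the VM's Wireguard address rather than `0.0.0.0`, the forwarded port is not opened on the public interface.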

|

||||

|

||||

#### Set up the test machine (mobile?)

|

||||

|

||||

On some computer with `wg` installed, generate the keys:

|

||||

|

||||

```bash

|

||||

$ wg genkey | tee privatekey | wg pubkey > publickey

|

||||

```

|

||||

|

||||

Create the Wireguard configuration. Follow the instructions for your favorite application.

|

||||

For Android, you can use this: https://play.google.com/store/apps/details?id=com.wireguard.android

|

||||

|

||||

If setting up a Linux machine, you can create the configuration like this:

|

||||

|

||||

```bash

|

||||

$ cat << EOF > /etc/wireguard/wg0.conf

|

||||

[Interface]

|

||||

Address = 192.168.6.2/24

|

||||

PrivateKey = $(cat privatekey)

|

||||

|

||||

# The cloud VM

|

||||

[Peer]

|

||||

PublicKey = <public key from the previous step>

|

||||

AllowedIPs = 192.168.6.1/32

|

||||

Endpoint = 1.2.3.4:41194

|

||||

EOF

|

||||

```
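
The two configurations mirror each other: each side lists the other's public key and its VPN address as `AllowedIPs`, and only the client needs an `Endpoint`. As a rough sketch of that symmetry, the snippet below renders both files from the same inputs. The function `render_wg_config`, the peer dictionary layout, and the placeholder key strings are all assumptions for illustration; the subnet check is a sanity check specific to this tutorial's addressing, not a Wireguard requirement.

```python
import ipaddress


def render_wg_config(address, private_key, peers, listen_port=None):
    """Render a wg-quick style configuration as text.

    Each peer is a dict with "public_key" and "allowed_ips", plus optional
    "endpoint" and "comment" keys (hypothetical structure for this sketch).
    """
    iface = ipaddress.ip_interface(address)
    lines = [
        "[Interface]",
        f"Address = {iface.with_prefixlen}",
        f"PrivateKey = {private_key}",
    ]
    if listen_port is not None:
        lines.append(f"ListenPort = {listen_port}")

    for peer in peers:
        allowed = ipaddress.ip_network(peer["allowed_ips"])
        # Tutorial-specific sanity check: every peer lives in the VPN subnet.
        if not allowed.subnet_of(iface.network):
            raise ValueError(f"{allowed} is outside {iface.network}")
        lines.append("")
        if "comment" in peer:
            lines.append(f"# {peer['comment']}")
        lines.append("[Peer]")
        lines.append(f"PublicKey = {peer['public_key']}")
        lines.append(f"AllowedIPs = {allowed}")
        if "endpoint" in peer:
            lines.append(f"Endpoint = {peer['endpoint']}")
    return "\n".join(lines) + "\n"


vm_config = render_wg_config(
    "192.168.6.1/24", "<vm private key>",
    [{"comment": "Mobile client", "public_key": "<phone public key>",
      "allowed_ips": "192.168.6.2/32"}],
    listen_port=41194,
)
phone_config = render_wg_config(
    "192.168.6.2/24", "<phone private key>",
    [{"comment": "The cloud VM", "public_key": "<vm public key>",
      "allowed_ips": "192.168.6.1/32", "endpoint": "1.2.3.4:41194"}],
)
print(vm_config)
print(phone_config)
```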

|

||||

|

||||

Start and enable the Wireguard service. If on a Linux machine, something like this will do:

|

||||

|

||||

```bash

|

||||

$ sudo systemctl enable --now wg-quick@wg0

|

||||

```

|

||||

|

||||

On a mobile, follow the instructions of your application.

|

||||

|

||||

After a while, your client should be able to ping the IP address of the VM: `192.168.6.1`.

|

||||

You may find the output of `wg show` useful while waiting for the peers to connect.

|

||||

|

||||

#### Set up the cluster

|

||||

|

||||

Deploy the helper Pod. We will use an image created [with this Dockerfile](https://codeberg.org/dkarakasilis/self-hosted-ci/src/branch/main/image) and

|

||||

published [here](https://quay.io/repository/jimmykarily/nginx-ssh-reverse-proxy). The image's entrypoint works with a config

|

||||

described [here](https://codeberg.org/dkarakasilis/self-hosted-ci/src/commit/20d7c6cbf70cd5318309362b0897e6aeb9842b82/image/start.sh#L5-L27).

|

||||

The image is not multiarch, but there is one suitable for Raspberry Pi 4 (see the comment in the file).

|

||||

|

||||

If you are going to create a fresh Kairos cluster, you can use a config like the following to automatically set up the helper Pod (make sure you replace the `id_rsa` and `id_rsa.pub` keys).

|

||||

If you prefer not to have the keys stored on your Kairos host filesystem, you can simply create the same resources using `kubectl apply -f` after your cluster is up and running.

|

||||

|

||||

```yaml

|

||||

#cloud-config

|

||||

|

||||

users:

|

||||

- name: kairos

|

||||

passwd: kairos

|

||||

|

||||

stages:

|

||||

after-install-chroot:

|

||||

- files:

|

||||

- path: /var/lib/rancher/k3s/server/manifests/rproxy-pod.yaml

|

||||

content: |

|

||||

---

|

||||

apiVersion: v1

|

||||

data:

|

||||

id_rsa: the_vms_private_key_in_base64

|

||||

id_rsa.pub: the_vms_public_key_in_base64

|

||||

kind: Secret

|

||||

metadata:

|

||||

name: jumpbox-ssh-key

|

||||

type: Opaque

|

||||

|

||||

---

|

||||

apiVersion: v1

|

||||

kind: ConfigMap

|

||||

metadata:

|

||||

name: proxy-config

|

||||

data:

|

||||

config.json: |

|

||||

{

|

||||

"services": [

|

||||

{

|

||||

"bindIP": "192.168.6.1",

|

||||

"bindPort": "443",

|

||||

"proxyAddress": "traefik.kube-system.svc",

|

||||

"proxyPort": "443"

|

||||

},

|

||||

{

|

||||

"bindIP": "192.168.6.1",

|

||||

"bindPort": "80",

|

||||

"proxyAddress": "traefik.kube-system.svc",

|

||||

"proxyPort": "80"

|

||||

}

|

||||

],

|

||||

"jumpbox": {

|

||||

"url": "1.2.3.4",

|

||||

"user": "root",

|

||||

"sshKeyFile": "/ssh/id_rsa"

|

||||

}

|

||||

}

|

||||

|

||||

---

|

||||

apiVersion: apps/v1

|

||||

kind: Deployment

|

||||

metadata:

|

||||

annotations:

|

||||

name: nginx-ssh-reverse-proxy

|

||||

spec:

|

||||

replicas: 1

|

||||

selector:

|

||||

matchLabels:

|

||||

app.kubernetes.io/instance: nginx-ssh-reverse-proxy

|

||||

app.kubernetes.io/name: nginx-ssh-reverse-proxy

|

||||

template:

|

||||

metadata:

|

||||

labels:

|

||||

app.kubernetes.io/instance: nginx-ssh-reverse-proxy

|

||||

app.kubernetes.io/name: nginx-ssh-reverse-proxy

|

||||

spec:

|

||||

containers:

|

||||

- name: proxy

|

||||

# Change to quay.io/jimmykarily/nginx-ssh-reverse-proxy-arm64:latest

|

||||

# if you are running on a Raspberry Pi 4

|

||||

image: quay.io/jimmykarily/nginx-ssh-reverse-proxy:latest

|

||||

command: ["/start.sh", "/proxy-config/config.json"]

|

||||

imagePullPolicy: Always

|

||||

volumeMounts:

|

||||

- name: ssh-key

|

||||

mountPath: /ssh

|

||||

- name: config-volume

|

||||

mountPath: /proxy-config/

|

||||

volumes:

|

||||

- name: ssh-key

|

||||

secret:

|

||||

secretName: jumpbox-ssh-key

|

||||

defaultMode: 0400

|

||||

- name: proxy-config

|

||||

- name: config-volume

|

||||

configMap:

|

||||

name: proxy-config

|

||||

|

||||

```
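
The `data` fields of a Kubernetes Secret hold base64-encoded values, so the key pair has to be encoded before it is pasted into the manifest above. A small sketch of that encoding step follows; the function names and the placeholder byte strings are assumptions for illustration, and in practice you would read the real key files instead.

```python
import base64


def encode_secret_value(raw: bytes) -> str:
    """Return the base64 text Kubernetes expects under a Secret's `data`."""
    return base64.b64encode(raw).decode("ascii")


def secret_data(files: dict) -> dict:
    """Map Secret keys (e.g. "id_rsa") to base64-encoded file contents."""
    return {name: encode_secret_value(content) for name, content in files.items()}


# Placeholder bytes; with real keys you could use
# pathlib.Path("id_rsa").read_bytes() and the matching .pub file.
example = secret_data({
    "id_rsa": b"PRIVATE KEY BYTES",
    "id_rsa.pub": b"PUBLIC KEY BYTES",
})
for key, value in example.items():
    print(f"{key}: {value}")
```

The printed lines can replace the `the_vms_private_key_in_base64` and `the_vms_public_key_in_base64` placeholders.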

|

||||

|

||||

In a nutshell, the config above is creating a reverse SSH tunnel from the VM

|

||||

to the Pod. Inside the Pod, nginx redirects traffic to the traefik load balancer running

|

||||

on the cluster. The effect is that any request landing on the VM on ports 80 and 443

|

||||

will eventually reach the Traefik instance inside the cluster on ports 80 and 443.

|

||||

As a result, you can point any domain you want to the VM and it will reach the corresponding Ingress defined on your cluster.

|

||||

|

||||

{{% alert color="info" %}}

|

||||

|

||||

**NOTE:** The SSH tunnel binds only the IP address `192.168.6.1` on the VM, which means that anyone trying to access the VM using its public IP address will not be able to reach the cluster. Only machines that can talk to `192.168.6.1`, in other words machines inside the VPN, have access.

|

||||

|

||||

{{% /alert %}}

|

||||

|

||||

#### Test the connection

|

||||

|

||||

- Try to access the cluster with the VPN IP address (should work).

|

||||

From your test peer, open `http://192.168.6.1`. You should see a 404 message from Traefik.

|

||||

You can also verify it is a response from Traefik in your cluster, by calling curl

|

||||

on the `https` endpoint (on a "default" k3s installation):

|

||||

|

||||

```bash

|

||||

$ curl -k -v https://192.168.6.1 2>&1 | grep TRAEFIK

|

||||

* subject: CN=TRAEFIK DEFAULT CERT

|

||||

* issuer: CN=TRAEFIK DEFAULT CERT

|

||||

```

|

||||

|

||||

- Try to access the cluster with a domain pointing to the VPN IP address (should work)

|

||||

You can create a wildcard DNS record and point it to the VPN IP address if

|

||||

you want to make it easier for people to access the services you are running.

|

||||

E.g. by creating an A record like this: `*.mydomainhere.org -> 192.168.6.1`

|

||||

you will be able to create Ingresses for your applications like:

|

||||

`app1.mydomainhere.org`, `app2.mydomainhere.org`.

|

||||

|

||||

- Try to access the cluster using the public IP address (should not work)

|

||||

|

||||

```bash

|

||||

$ curl http://1.2.3.4

|

||||

```

|

||||

This command should fail to connect to your cluster.
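
For the wildcard-record case in the list above, each application needs an Ingress whose `host` matches one of the names under the wildcard. The following is a hedged sketch that generates such a manifest as JSON, which `kubectl apply -f -` accepts just like YAML. The helper name `ingress_for` and the Service name and port (`app1`, `80`) are hypothetical; adjust them to whatever actually runs in your cluster.

```python
import json


def ingress_for(host, service_name, service_port, name=None):
    """Build a networking.k8s.io/v1 Ingress routing `host` to a Service."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        # Default the Ingress name to the first DNS label, e.g. "app1".
        "metadata": {"name": name or host.split(".")[0]},
        "spec": {
            "rules": [{
                "host": host,
                "http": {"paths": [{
                    "path": "/",
                    "pathType": "Prefix",
                    "backend": {"service": {
                        "name": service_name,
                        "port": {"number": service_port},
                    }},
                }]},
            }],
        },
    }


manifest = ingress_for("app1.mydomainhere.org", "app1", 80)
print(json.dumps(manifest, indent=2))
```

Saving the output to a file and applying it with `kubectl apply -f` should make `app1.mydomainhere.org` resolve to the VM's VPN address and land on that Service, provided the Service exists.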

|

||||

|

||||

|

||||

### Conclusion

|

||||

|

||||

For non-critical workloads, where 100% uptime is not a hard requirement, the solution we described allows one to use services that would otherwise cost several times more, by hosting

|

||||

those on their own hardware. It does so without exposing the home network to the public.

|

||||

|

||||

If you liked this solution or if you have comments, questions or recommendations for improvements, please reach out!

|

||||

|

||||

### Useful links

|

||||

|

||||

- [Kairos documentation](https://kairos.io/docs/)

|

||||

- [WireGuard documentation](https://www.wireguard.com/quickstart/)

|

||||

@ -1,23 +0,0 @@

|

||||

---

|

||||

title: "Kairos, SpectroCloud, and Canonical Collaborate to Deliver Revolutionary Telco Radio Edge Solution"

|

||||

date: 2023-03-13

|

||||

linkTitle: "Kairos, SpectroCloud, and Canonical Collaboration at MWC"

|

||||

description: |

|

||||

Kairos, the open-source distributed infrastructure platform, has collaborated with SpectroCloud and Canonical to develop a revolutionary Telco Radio Edge solution. The solution leverages the latest advances in OpenRAN automation and distributed compute management, and is set to take center stage at this year's Mobile World Congress.

|

||||

|

||||

author: Ettore Di Giacinto ([Twitter](https://twitter.com/mudler_it)) ([GitHub](https://github.com/mudler))

|

||||

---

|

||||

|

||||

Hello Kairos community!

|

||||

|

||||

We are thrilled to announce that **Kairos** has been used as a key building block for a revolutionary Telco Radio Edge solution developed in collaboration with [Spectro Cloud](https://www.spectrocloud.com/) and [Canonical](https://canonical.com/). This cutting-edge solution showcases the latest advances in OpenRAN automation and distributed compute management, and took center stage at this year's Mobile World Congress.

|

||||

|

||||

The Telco Radio Edge solution leverages the power of Kairos, Ubuntu Pro 22.04 LTS with RT kernel, and MicroK8s CAPI provider to deliver highly distributed edge node onboarding, secure deployment, and substrate provisioning. With this innovative technology stack, we’ve enabled OpenRAN o-DU service orchestration at scale, while optimizing performance, scalability, reliability, and security.

|

||||

|

||||

This is an exciting collaboration between the Kairos project, Spectro Cloud and Canonical to develop a solution that is highly performant, efficient, and scalable. The demos that have been presented at MWC showcase the advanced capabilities of the MicroK8s CAPI provider, and highlight the power of Kairos as a building block for distributed infrastructure substrates that can host even the most demanding modern OpenRAN o-DU, 5G UPF or AI/ML use-cases at scale.

|

||||

|

||||

This is a true testament to the power of open-source technologies and community collaboration, and we can't wait to see what new possibilities this partnership will bring.

|

||||

|

||||

Thank you for your continued support and enthusiasm for Kairos!

|

||||

|

||||

**Details**: You can learn more about the Telco Radio Edge solution on the Ubuntu blog https://ubuntu.com/blog/meet-canonical-at-mwc-barcelona-2023 or at https://ubuntu.com/blog/canonical-at-mwc and watch it in action here: https://www.youtube.com/watch?v=wUCSK0O8Ro4

|

||||

@ -1,9 +0,0 @@

|

||||

---

|

||||

title: "Media Section"

|

||||

date: 2023-01-04T17:17:11+01:00

|

||||

author: Mauro Morales ([Twitter](https://twitter.com/mauromrls)) ([GitHub](https://github.com/mauromorales))

|

||||

---

|

||||

|

||||

We've added a new media section so it's easy to find the different videos and articles about Kairos. To access it, go to the Documentation and at the bottom of the left menu, you will find a link called Media.

|

||||

|

||||

You can also [click here]({{< ref "/media" >}} "Media") to go check it out.

|

||||

@ -1,41 +0,0 @@

|

||||

---

|

||||

title: "Kairos release v1.5"

|

||||

date: 2023-01-27

|

||||

linkTitle: "Announcing v1.5 Kairos release"

|

||||

description: "Introducing Kairos 1.5: A Smarter, More Secure Way to Manage Your Infrastructure"

|

||||

author: Ettore Di Giacinto ([Twitter](https://twitter.com/mudler_it)) ([GitHub](https://github.com/mudler))

|

||||

---

|

||||

<h1 align="center">

|

||||

<br>

|

||||

<img width="184" alt="kairos-white-column 5bc2fe34" src="https://user-images.githubusercontent.com/2420543/215073247-96988fd1-7fcf-4877-a28d-7c5802db43ab.png">

|

||||

<br>

|

||||

<br>

|

||||

</h1>

|

||||

|

||||

We are thrilled to announce the release of Kairos version 1.5, a major update that brings significant improvements to user experience and security. With this release, we have made it even easier for you to install and set up Kairos, as well as better protect your user data. Our community has been an invaluable source of feedback, bug reports, and contributions, and we are grateful for their support.

|

||||

|

||||

You can find Kairos core images at https://github.com/kairos-io/kairos/releases/tag/v1.5.0 and images with k3s pre-bundled here: https://github.com/kairos-io/provider-kairos/releases/tag/v1.5.1.

|

||||

|

||||

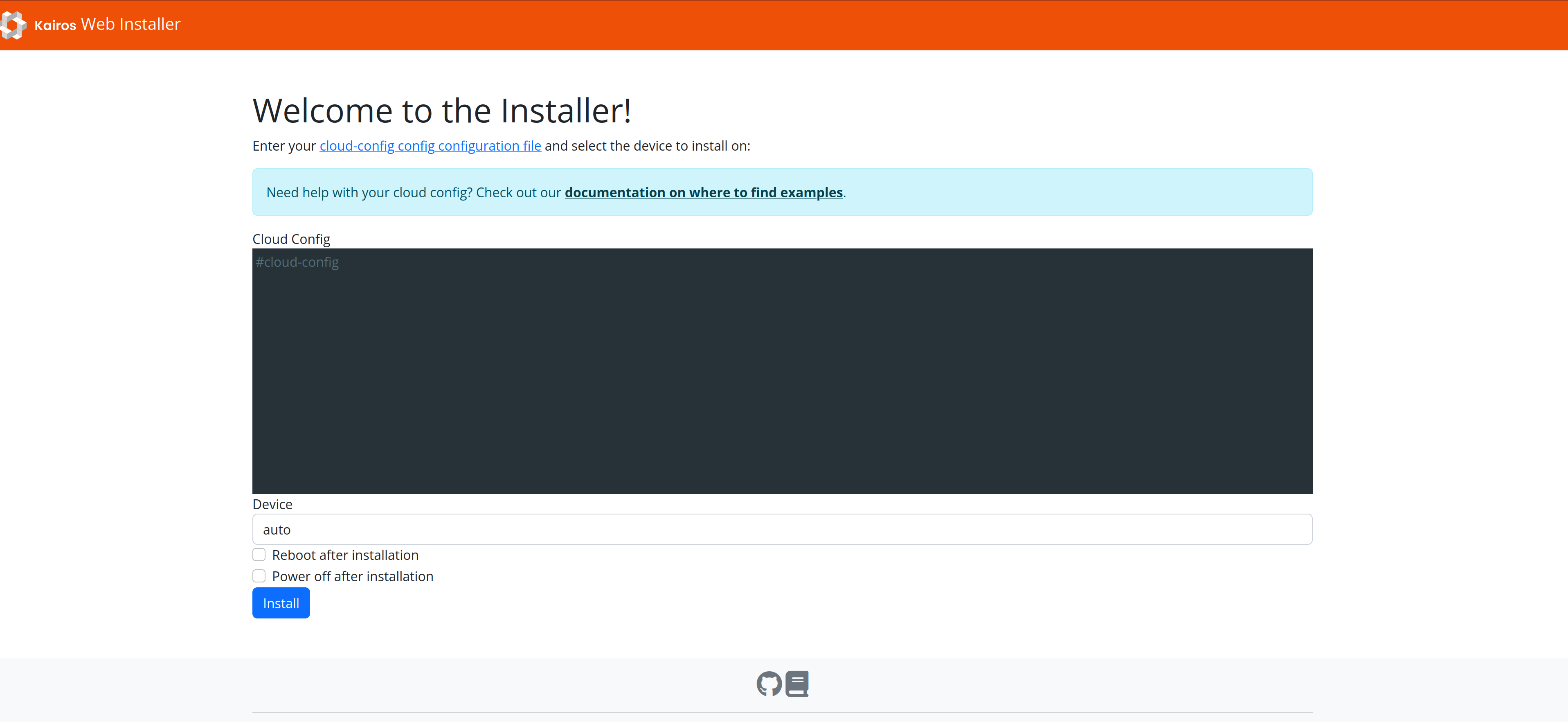

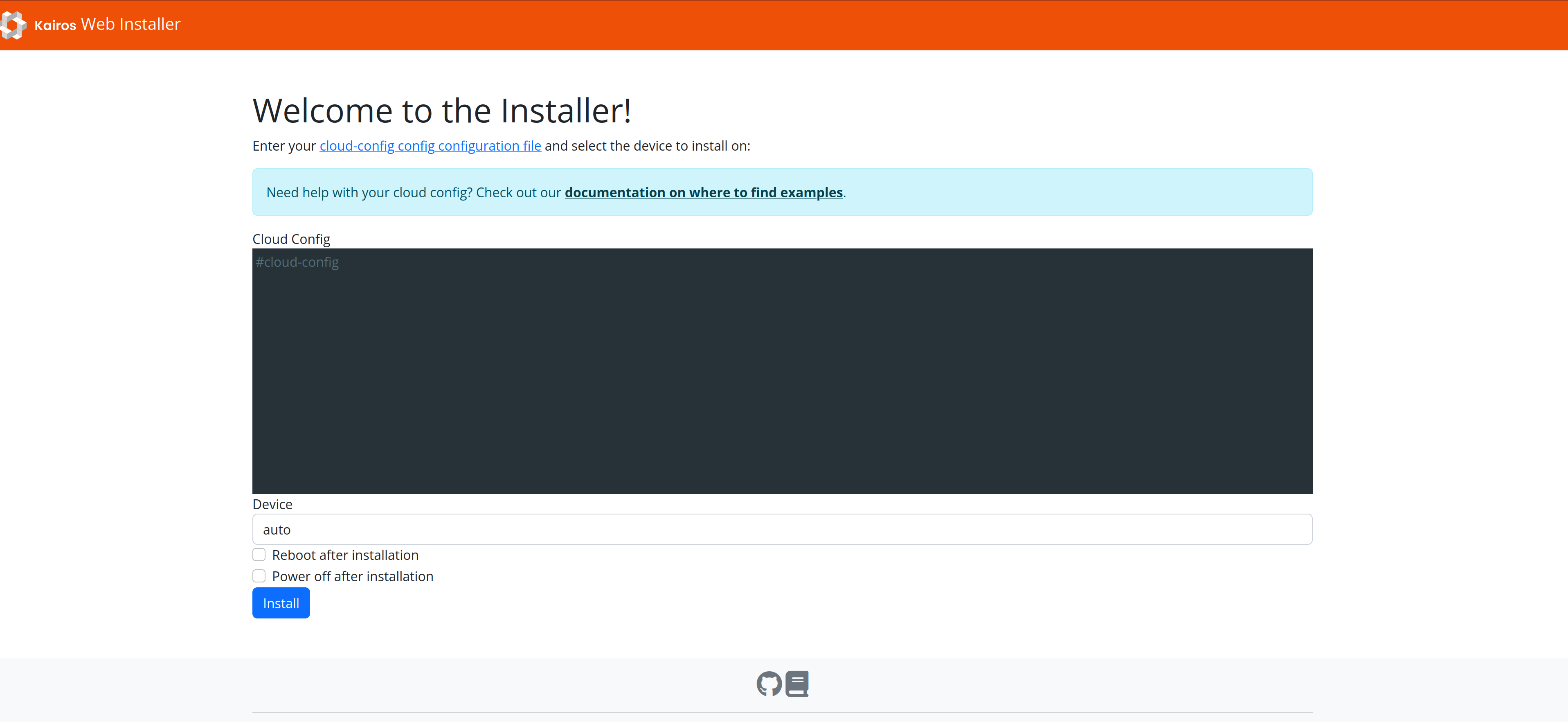

## Effortless Installation with the WebUI Installer

|

||||

|

||||

|

||||

|

||||

Gone are the days of complicated command-line instructions. With the new [WebUI installer](/docs/installation/webui/), installation and setup are a breeze. Simply follow the steps on the web page, and you'll be up and running in no time. You can also use [our core images as an installer](/docs/examples/core/). Take a look at this gif to see the WebUI installer in action:

|

||||

|

||||

|

||||

|

||||

## Protect Your Data with User Data Encryption at the Edge

|

||||

|

||||

Kairos 1.5 now allows you to encrypt your user data with ease, keeping it secure from prying eyes. Encryption is done via TPM and optionally with the Kairos KMS (Key Management Server) for external authentication and management of encrypted secrets. Check out our [documentation](/docs/advanced/partition_encryption) for more information on partition encryption.

|

||||

|

||||

## OS updates

|

||||

|

||||

We've added RockyLinux and Debian to our list of supported releases, giving you more options to run Kairos on both stable and feature-rich operating systems. We've also updated our Alpine support, so you can now run Kairos on the latest version of Alpine Linux.

|

||||

|

||||

## Extend Kairos with Custom Deployment Models (`bundles`)

|

||||

|

||||

Kairos 1.5 allows you to extend the configuration of your node with custom, container-based deployment models defined as `bundles`. Check out our [documentation](/docs/advanced/bundles) and [examples](/docs/examples/bundles) to see how to deploy `MetalLB`. `Kubevirt` and `MetalLB` bundles are also available in the [community-bundles](https://github.com/kairos-io/community-bundles) repository.

|

||||

|

||||

---

|

||||

|

||||

For a full list of changes, see the [Changelog](https://github.com/kairos-io/kairos/releases/tag/v1.5.0). We hope you find these updates useful and as always, let us know if you have any questions or feedback. Thanks for using Kairos!

|

||||

@ -1,67 +0,0 @@

|

||||

---

|

||||

title: "Kairos release v1.6"

|

||||